How ReLens built the Vibecoding Leaderboard

Andrej Karpathy coined the term vibecoding to describe a new way of programming where you direct, iterate, and create at the speed of thought. If you use tools like Cursor, you know this flow state.

The criteria for choosing technologies have fundamentally changed. Before, you'd pick a technology because you knew it or because it performed better. Now AI is part of your team, and you need objective data on how well your favorite models can actually wield each technology. That's what the Vibecoding Leaderboard measures.

1. The Foundation: From Text Analysis to Code Validation

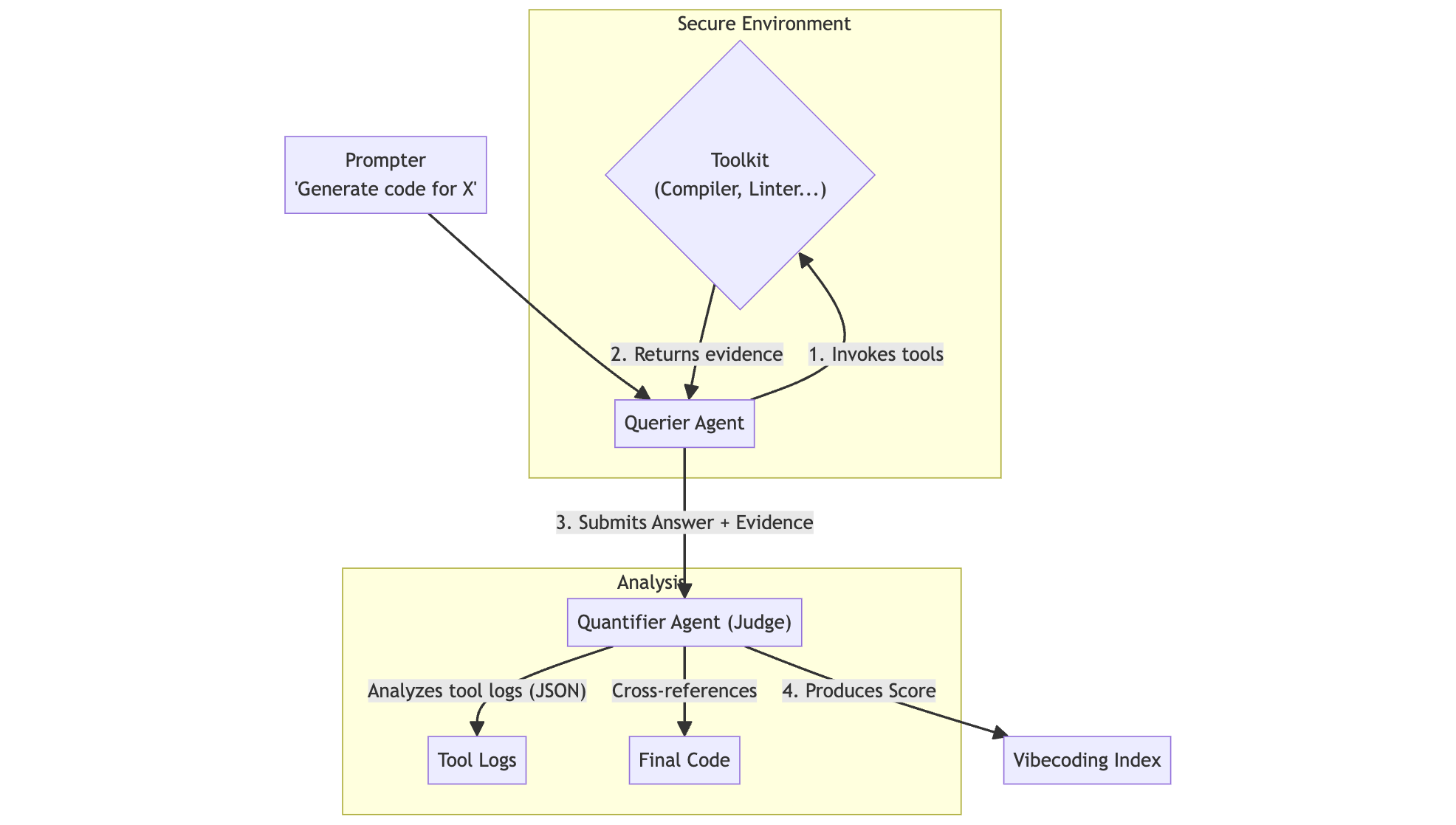

Our core engine, ReLens, was designed to survey AI agents and understand how they talk about your product (essentially measuring "SEO for LLMs"). It does this using specialized pipelines. One of them, the understanding pipeline, moves beyond simple presence-checking: a prompter asks targeted questions, a querier collects AI responses, and a quantifier scores them on customizable criteria.
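To make the three roles concrete, here is a minimal sketch of how a prompter, a querier, and a quantifier could be chained. It is an illustration only, not the ReLens code: the function and class names, the stub stages, and the "ExampleDB" product are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ScoredAnswer:
    question: str
    answer: str
    scores: Dict[str, float]  # criterion name -> score


def run_understanding_pipeline(
    topic: str,
    prompter: Callable[[str], List[str]],
    querier: Callable[[str], str],
    quantifier: Callable[[str, str], Dict[str, float]],
) -> List[ScoredAnswer]:
    """Chain the three stages: ask questions, collect answers, score them."""
    results = []
    for question in prompter(topic):
        answer = querier(question)
        scores = quantifier(question, answer)
        results.append(ScoredAnswer(question, answer, scores))
    return results


# Hypothetical stub stages; a real querier would call a model API instead.
def stub_prompter(topic: str) -> List[str]:
    return [f"What is {topic} used for?", f"How do I install {topic}?"]


def stub_querier(question: str) -> str:
    return f"(model answer to: {question})"


def stub_quantifier(question: str, answer: str) -> Dict[str, float]:
    # A single made-up criterion: does the answer mention the product at all?
    return {"mentions_topic": 10.0 if "ExampleDB" in answer else 0.0}


report = run_understanding_pipeline(
    "ExampleDB", stub_prompter, stub_querier, stub_quantifier
)
```

Keeping each stage behind a plain callable means any one of them can be swapped out, which is the property the following sections rely on when the querier and quantifier become agents.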

This is great for measuring what an AI says. But what about what it can do? We realized our tooling was powerful enough to answer that question. For the Vibecoding Leaderboard, we needed to go beyond words and validate working code.

2. The Agentic Leap: Giving Our Pipeline Tools

Our first evolution was to make the relens-querier agentic. We upgraded it from a simple messenger to an active agent with a toolkit that mirrors a modern developer's environment. Think of it like Cursor: it can invoke tools that query a language server, run linters, and so on. When it generates code, it doesn't just output text; it acts like a developer, using these tools in a secure Docker container to verify its work before submitting a final answer.
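As a rough sketch of what "running a tool in a secure Docker container" can look like, the snippet below builds a `docker run` invocation with networking disabled and the generated code mounted read-only. The image name, paths, and the choice of linter are assumptions for illustration; the article does not describe how ReLens configures its containers.

```python
import subprocess
from typing import List


def build_docker_command(image: str, workdir: str, tool_cmd: List[str]) -> List[str]:
    """Build an argv list that runs tool_cmd inside a throwaway container."""
    return [
        "docker", "run",
        "--rm",                        # delete the container when the tool exits
        "--network", "none",           # no network access for generated code
        "-v", f"{workdir}:/work:ro",   # mount the code under test read-only
        "-w", "/work",                 # run the tool from the mounted directory
        image,
        *tool_cmd,
    ]


def run_tool(image: str, workdir: str, tool_cmd: List[str], timeout_s: int = 120):
    """Run the tool and return (exit_code, combined output) for the agent to read."""
    completed = subprocess.run(
        build_docker_command(image, workdir, tool_cmd),
        capture_output=True,
        text=True,
        timeout=timeout_s,
    )
    return completed.returncode, completed.stdout + completed.stderr


# Hypothetical image name and job directory; the linter is just an example tool.
lint_cmd = build_docker_command("example/dev-tools:latest", "/tmp/job-1", ["ruff", "check", "."])
```

The agent would read the exit code and output returned by `run_tool`, revise its code, and call the tool again until the checks pass or it runs out of attempts.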

3. One Agent to Judge Another: The Core Innovation

The real innovation came when we also made the relens-quantifier agentic, turning it from a passive scorer into an objective judge. Because our architecture runs any tool in a secure Docker container, we can arm the quantifier with custom validation tools tailored to any task. This allows it to move beyond surface-level analysis and truly measure the quality of the querier's answer based on hard evidence from the tool logs.

Here's a fun fact from our development process: in an earlier version, we found that when the querier and the quantifier were from the same model family (e.g., both GPT-4), the quantifier would give high scores to the querier's answer simply because it liked the writing style, even if the code was completely wrong. They were, in a sense, "vibing" with each other. Making our judge rely on hard evidence from tool outputs, not just well-written prose, was the only way to eliminate these "hallucination agreements" and get to the truth.
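One simple way to express "evidence over prose" in code is to let tool results cap whatever score the judge would otherwise give. The sketch below is a hypothetical rule, not the quantifier's actual logic: the `ToolResult` shape, the cap value of 3.0, and the function name are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToolResult:
    tool: str
    exit_code: int  # 0 means the tool reported success
    output: str


def evidence_based_score(
    impression_score: float,
    tool_results: List[ToolResult],
    cap_without_evidence: float = 3.0,
) -> float:
    """Limit a judge's impression of an answer by what the tools observed.

    If no tool was run, or any tool failed, the score cannot exceed the cap,
    however convincing the answer reads.
    """
    if not tool_results:
        return min(impression_score, cap_without_evidence)
    if any(result.exit_code != 0 for result in tool_results):
        return min(impression_score, cap_without_evidence)
    return impression_score


# A well-written answer whose code does not compile is capped.
polished_but_broken = evidence_based_score(
    9.5, [ToolResult("compile", 1, "error: undefined symbol")]
)
# The same impression backed by passing tools keeps its score.
polished_and_verified = evidence_based_score(
    9.5, [ToolResult("compile", 0, ""), ToolResult("tests", 0, "4 passed")]
)
```

With a rule like this, two models that share a writing style can no longer agree their way to a high score: the compiler and test runner have the final say.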

4. A Multi-Dimensional Evaluation Framework

So how do we grade the final result? Our quantifier agent scores each response across several key categories:

Code Compilation: Measures if the code works. Criteria include Executability and Functional Correctness.

Code Quality Support: Evaluates adherence to standards. Criteria include Idiomatic Usage and Minimal Working Example.

Security Awareness: Assesses the safety of the generated code. Criteria include Secret Management and Role & Permission Awareness.

Problem Solving Helpfulness: Evaluates how well AI can diagnose and fix real-world issues. Criteria include Issue Identification, Explanation Clarity, Accuracy of Suggestions, Version Awareness, Contextual Reasoning, and Prioritization & Relevance.

A score of 9-10 isn't for code that "looks good." It is reserved for responses that are demonstrably superior and pass every tool validation we throw at them.
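The categories and criteria above map naturally onto a small data structure. In the sketch below, the category and criterion names come from the list above, while the aggregation (a plain mean per category) is an assumption made for illustration; the article does not say how criterion scores are combined.

```python
from statistics import mean
from typing import Dict

RUBRIC = {
    "Code Compilation": ["Executability", "Functional Correctness"],
    "Code Quality Support": ["Idiomatic Usage", "Minimal Working Example"],
    "Security Awareness": ["Secret Management", "Role & Permission Awareness"],
    "Problem Solving Helpfulness": [
        "Issue Identification",
        "Explanation Clarity",
        "Accuracy of Suggestions",
        "Version Awareness",
        "Contextual Reasoning",
        "Prioritization & Relevance",
    ],
}


def category_scores(criterion_scores: Dict[str, float]) -> Dict[str, float]:
    """Average the criterion scores within each category.

    Raises KeyError if any criterion in the rubric has no score.
    """
    return {
        category: mean(criterion_scores[name] for name in criteria)
        for category, criteria in RUBRIC.items()
    }


# Example input: every criterion scored 8.0, except a perfect Executability.
sample = {name: 8.0 for criteria in RUBRIC.values() for name in criteria}
sample["Executability"] = 10.0
summary = category_scores(sample)
```

Reporting one number per category, rather than a single overall score, keeps a strong compilation result from hiding a weak security result.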

Conclusion

The Vibecoding Leaderboard, then, isn't a subjective measure of a "vibe." It is the output of a rigorous, multi-step validation process where AI agents are tested with concrete, real-world tools. By moving beyond surface-level analysis to an evidence-based framework, we give developers and team leads a reliable signal for deciding which technologies are truly "AI-native" and ready for a modern development stack.

Want to see how your favorite technologies score? Check out the Vibecoding Leaderboard and discover which tools are truly AI-ready.