How UnLink Went from AI Peer Coding to Agent-Native in Under a Week

UnLink builds privacy infrastructure for blockchain. Their engineering team of three was already using Cursor and Copilot, but they weren't getting the leverage they expected. AI was helping, not transforming.

After a 4-hour bootcamp and 10 days of accountability, they made the leap to agent-native. Now they run 3-4 parallel agent sessions, shipping verified features with tests included. The bottleneck shifted from typing to reviewing.

This is their story.

The Challenge: Smart Engineers, Outdated Workflow

UnLink's team wasn't struggling with skill. They were struggling with leverage.

Their codebase spans Rust backend services, Circom ZK circuits, Solidity smart contracts, and a TypeScript SDK. Context switching between these layers was draining. Each pull request required mental gymnastics that left engineers exhausted by mid-afternoon.

The symptoms were familiar:

- Code generation tools existed, but adoption was shallow (autocomplete only)

- Developers spent more time debugging AI suggestions than benefiting from them

- Tests were written after bugs were found, not before features shipped

- Environment sync issues between local and production caused deployment headaches

Their CEO, Paul-Henry, put it bluntly:

If you just come and show slides for an hour, the developers aren't going to go install the tools. You lose people. They won't see the benefits. The friction is real.

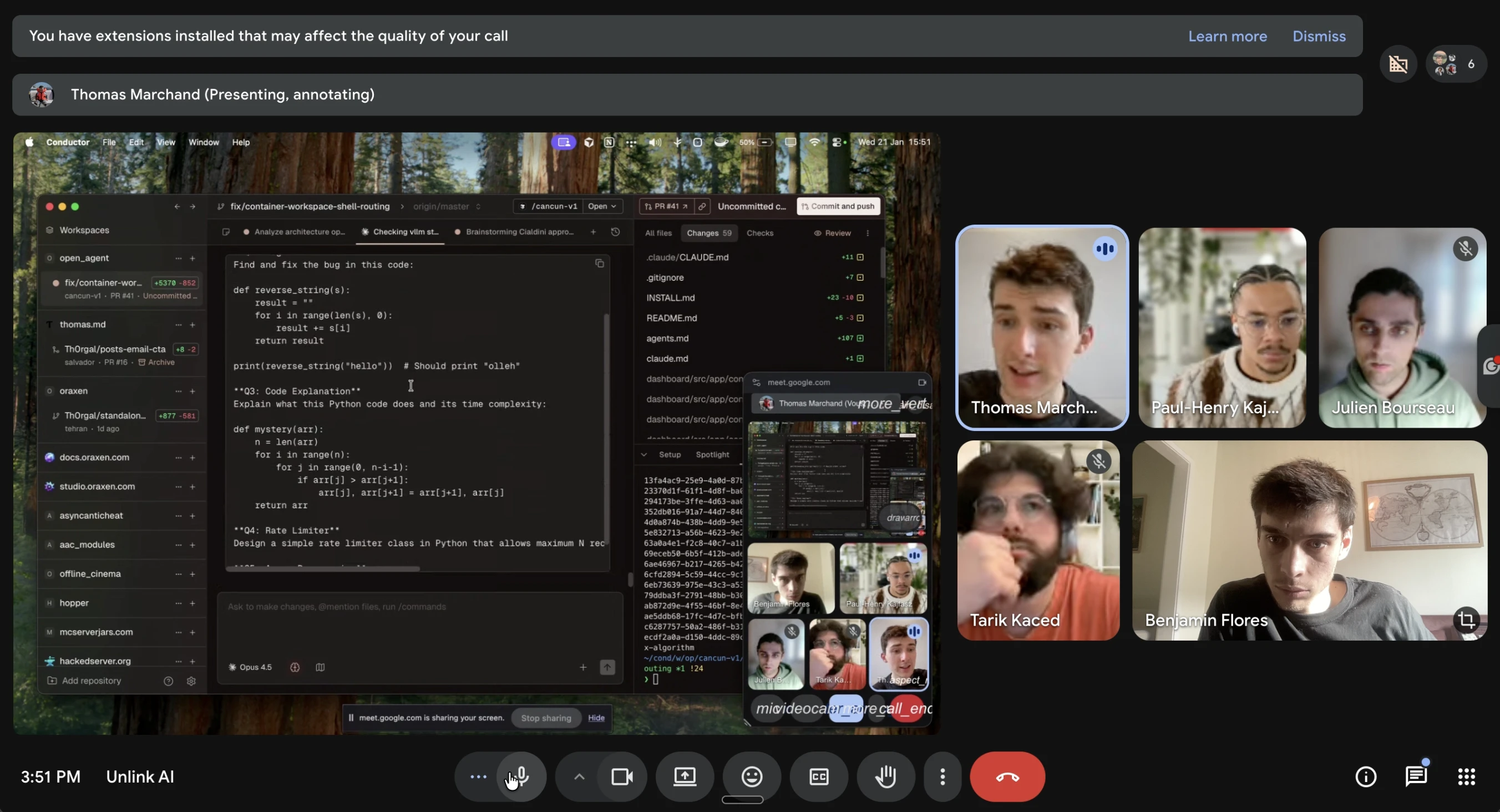

They didn't need another pitch about AI productivity. They needed someone to sit with them, validate their workflows, and prove the gains firsthand.

The Solution: High-Touch, Agent-Native Training

We designed a program specifically for teams like UnLink: a 4-hour bootcamp followed by 10 days of daily Slack accountability.

Day 1: The Foundation

The first session wasn't about tools. It was about mental models.

The core insight: The developer's job has shifted. You're no longer the typist. You're the architect who writes specifications and reviews outcomes.

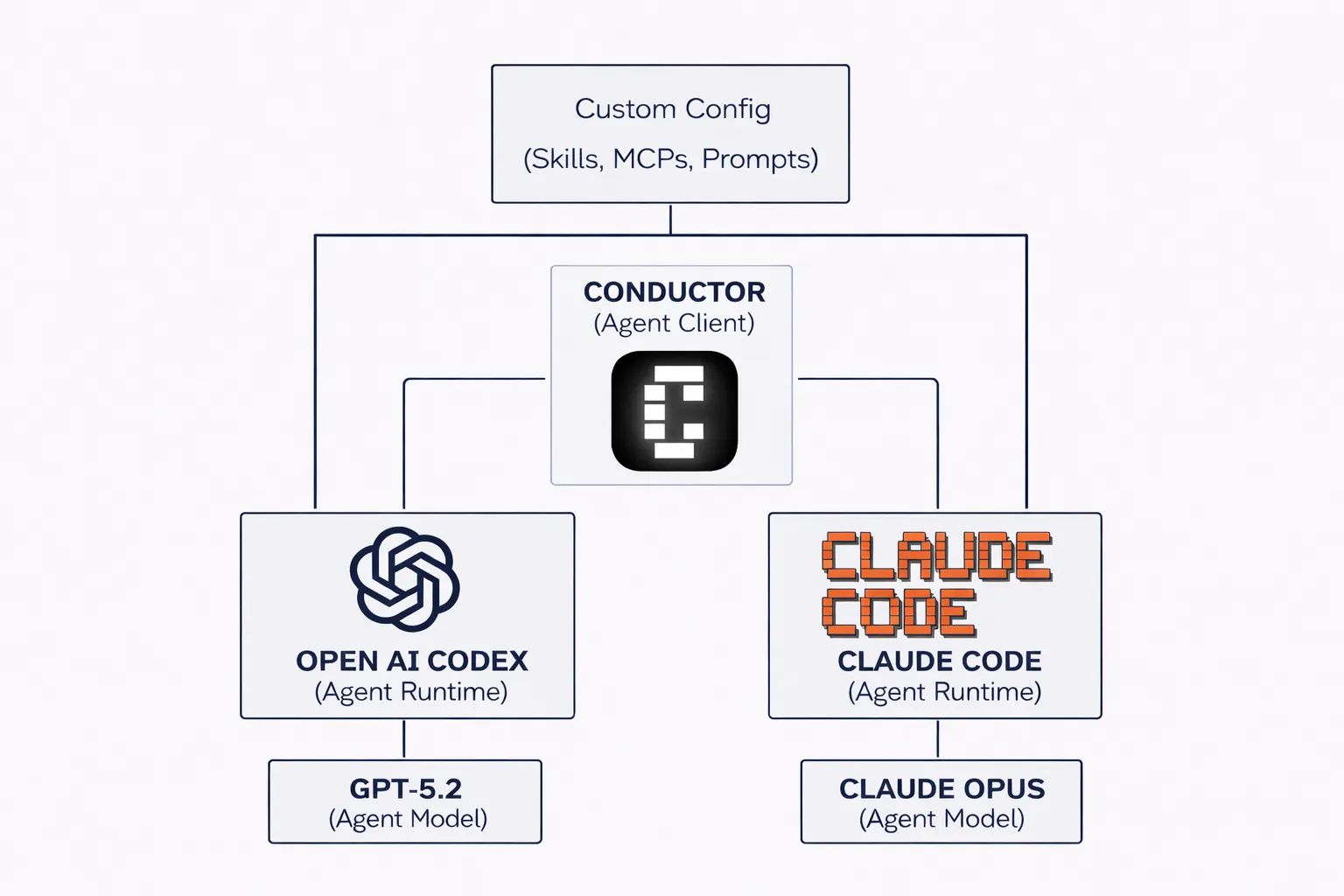

We covered the three building blocks of agent-native development:

- Model - Which AI to use and why (Claude Opus 4.5 for complex reasoning, Haiku for quick tasks)

- Runtime - The loop that turns a model into an autonomous worker

- Client - Where you interact with agents (we use Conductor for parallel orchestration)

Then came the configuration layer that makes agents actually useful:

- Context files (CLAUDE.md) - Your agent's memory of rules and patterns

- Skills - Reusable workflows triggered by keywords or commands

- MCPs (Model Context Protocol servers) - External tool integrations (GitHub, Slack, databases)
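
To make the context file concrete, here is a minimal sketch of a script that scaffolds a starter CLAUDE.md. The function name `write_claude_md`, the directory names, and the build and test commands are all placeholders chosen for illustration; they are not UnLink's actual layout or tooling.

```shell
#!/usr/bin/env bash
# Sketch: write a starter CLAUDE.md at the root of a repository.
# Every directory name and command below is a placeholder, not UnLink's setup.

write_claude_md() {
  local repo_root="${1:-.}"
  local target="$repo_root/CLAUDE.md"

  # Refuse to overwrite an existing context file.
  if [ -e "$target" ]; then
    echo "CLAUDE.md already exists at $target" >&2
    return 1
  fi

  # Quoted heredoc delimiter: the content is written literally, nothing is expanded.
  cat > "$target" <<'EOF'
# Project context

## Repo structure (hypothetical)
- services/   Rust backend services
- circuits/   Circom ZK circuits
- contracts/  Solidity smart contracts
- sdk/        TypeScript SDK

## Build commands (hypothetical)
- Rust: `cargo build` in services/
- SDK: `npm run build` in sdk/

## Test commands (hypothetical)
- Rust: `cargo test` in services/
- SDK: `npm test` in sdk/

## Rules
- Run the relevant test command before every commit.
EOF
  echo "wrote $target"
}
```

Calling `write_claude_md .` from the repo root creates the file once; after that it is meant to be edited by hand as the team learns what the agent keeps getting wrong.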

Day 2: Plan Mode and the Ralph Loop

The second session tackled the hardest part: trusting agents with complex tasks.

We introduced Plan Mode - a state where the agent explores and documents before writing any code. No more "amnesiac colleague" syndrome. The agent builds context, asks clarifying questions, and produces a plan you can review.

Then we taught the Ralph Loop - our pattern for long-running development:

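```shell
# Ralph Loop: restart the agent in a fresh process on every iteration.
while true; do
  # agent_task.sh is expected to finish one unit of work, commit, and exit.
  ./agent_task.sh
  # The process terminates after the commit, so the next iteration
  # starts with fresh context.
done
```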

The key insight: state lives on disk, not in memory. The agent process exits after each commit, so every iteration starts with fresh context, which limits hallucination drift.

Julien, one of UnLink's developers, immediately saw the application:

The acceptance criteria pass, but edge cases still emerge during manual testing. If I ask the agent to prove the fix works - reproduce the bug before, show it's gone after - I remove myself from validation entirely.

Days 3-10: Daily Accountability

Every business day, we asked two questions in their Slack:

- "What did you ship with the agent today?"

- "How did you improve the workflow to make the agent more autonomous?"

This wasn't micromanagement. It was habit formation.

Week 1 highlights:

- Created CLAUDE.md with build commands, test scripts, and repo structure

- Set up first skills for code review and deployment

- Configured MCP integrations for GitHub PR management

Week 2 highlights:

- Implemented Ralph Loop for feature development

- Solved the .env sync problem using encrypted commits with dotenvx

- Built automated PR review cycle with BugBot integration
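
The encrypted-commit approach to the .env sync problem might look roughly like the sketch below. It assumes the dotenvx CLI is installed and uses its `encrypt` and `run` subcommands; the function name `encrypt_env_for_commit` and the file names are illustrative, and the case study does not say exactly how UnLink wired this up.

```shell
#!/usr/bin/env bash
# Sketch: encrypt an env file so it can be committed, keeping the private key out of git.
# Assumes the dotenvx CLI is on PATH; file names are illustrative.

encrypt_env_for_commit() {
  local env_file="${1:-.env}"

  if ! command -v dotenvx >/dev/null 2>&1; then
    echo "dotenvx is not installed" >&2
    return 1
  fi

  # Encrypts the values in place; dotenvx stores the private key in .env.keys.
  dotenvx encrypt -f "$env_file" || return 1

  # Only the encrypted env file is committed; the key file must stay out of git.
  touch .gitignore
  grep -qxF ".env.keys" .gitignore || echo ".env.keys" >> .gitignore
}

# At runtime, with DOTENV_PRIVATE_KEY supplied out of band (for example by CI or
# the agent's environment), dotenvx decrypts the values for the wrapped command:
#   dotenvx run -f .env -- ./agent_task.sh
```

The design point is that every environment reads the same committed file, so local and production can only differ in which private key they hold, not in which variables exist.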

The Results: From Manual to Autonomous

Quantitative Impact

After 10 days:

- Development velocity: Features that took 2-3 days now ship in hours

- Code review cycles: Automated loop reduced human review to final approval only

- Test coverage: Shifted from reactive (post-bug) to proactive (pre-merge)

- Context switching: Developers run 3-4 parallel agent sessions instead of serializing tasks

Qualitative Transformation

The team's relationship with code changed fundamentally.

Tarik, senior engineer:

Rather than me thinking through tests upfront, I now ask the agent to prove the fix works. Reproduce the bug before, show it's gone after. This automates proof-of-correctness and removes manual validation entirely.

On environment synchronization:

Docker should create identical environments. If it doesn't, the issue is configuration and secrets, not Docker itself. We solved it with encrypted .env commits - the agent decrypts at runtime.

Paul-Henry's assessment:

The most interesting thing was seeing people who really do the work share their workflow. Having them validate your approach and come into your repo - that's what creates adoption.

Key Lessons for Engineering Teams

1. Do Things That Don't Scale

The temptation is to create documentation and let teams self-serve. It doesn't work. Friction kills adoption.

High-touch training ensures:

- Tools are actually installed and configured

- Workflows are validated against real codebases

- Developers experience the gains firsthand

One hour of pairing beats ten hours of documentation.

2. Context is the New Codebase

Your CLAUDE.md file becomes as important as your README. Every time you repeat yourself to the agent, document it. Every time a PR review catches a pattern violation, add it to context.

The agent's effectiveness compounds with context quality.
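
One low-friction way to build that habit is a small helper that records a rule the moment you catch yourself repeating it. The sketch below is an assumption about how this could be done, not a description of UnLink's tooling; `add_context_rule` and the bullet format are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: append a rule to CLAUDE.md once, so a repeated correction becomes
# persistent context. Function name and bullet format are assumptions.

add_context_rule() {
  local rule="$1"
  local file="${2:-CLAUDE.md}"

  if [ -z "$rule" ]; then
    echo "usage: add_context_rule \"<rule>\" [file]" >&2
    return 2
  fi

  # Create the file with a Rules heading if it does not exist yet.
  [ -f "$file" ] || printf '## Rules\n' > "$file"

  # Skip exact duplicates so the file stays short.
  if grep -qxF -- "- $rule" "$file"; then
    echo "already recorded"
    return 0
  fi

  # New rules are appended as bullets at the end of the file.
  printf -- '- %s\n' "$rule" >> "$file"
  echo "added"
}
```

For example, `add_context_rule "Never edit generated files by hand"` after a review comment turns a one-off correction into something every later agent session reads.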

3. Design for Testability from Day One

If you can't automate testing, you become the bottleneck. AI generates code fast. Manual testing is slow.

UnLink's insight: build tooling that lets agents verify their own work. Automated proof-of-fix beats manual QA every time.
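
A proof-of-fix check can be expressed as a small script: the regression test has to fail on the pre-fix code and pass on the post-fix code. The sketch below assumes two checkouts of the same repository (for instance, created with `git worktree add`) and a test command that reproduces the bug; `prove_fix` and its arguments are hypothetical names, not UnLink's tooling.

```shell
#!/usr/bin/env bash
# Sketch: proof-of-fix. The test command must FAIL in the pre-fix checkout and
# PASS in the post-fix checkout. All names here are illustrative.

prove_fix() {
  if [ "$#" -lt 3 ]; then
    echo "usage: prove_fix <before_dir> <after_dir> <test command...>" >&2
    return 2
  fi
  local before_dir="$1" after_dir="$2"
  shift 2  # the remaining arguments are the test command

  if [ ! -d "$before_dir" ] || [ ! -d "$after_dir" ]; then
    echo "both checkouts must exist" >&2
    return 2
  fi

  # Run the test inside the pre-fix checkout; success here means no reproduction.
  if (cd "$before_dir" && "$@") >/dev/null 2>&1; then
    echo "NOT PROVEN: test passes before the fix, so it does not reproduce the bug"
    return 1
  fi

  # Run the same test inside the post-fix checkout; it has to pass now.
  if ! (cd "$after_dir" && "$@") >/dev/null 2>&1; then
    echo "NOT PROVEN: test still fails after the fix"
    return 1
  fi

  echo "PROVEN: test fails before the fix and passes after"
}
```

An agent can be asked to run something like `prove_fix ../repo-before . cargo test regression_name` and paste the output into the PR, which gives the reviewer evidence instead of a claim.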

4. Fresh Context Beats Long Memory

Complex multi-phase tasks often fail because context degrades over a long session. The Ralph Loop pattern - terminate after each commit, restart with fresh context - limits hallucination drift and makes debugging simpler, because each iteration's work is a commit you can inspect in the git log.
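
To show what "state on disk" can mean in practice, here is a sketch of a Ralph-style loop driven by a task file. The TASKS.md checkbox format, the `ralph_loop` function, and the iteration cap are assumptions added for illustration; the agent command is passed in and is expected to complete one task, mark it done, commit, and exit.

```shell
#!/usr/bin/env bash
# Sketch: a Ralph-style loop where all state lives in a task file on disk.
# Assumed format: "- [ ]" marks an open task, "- [x]" a finished one.

ralph_loop() {
  local task_file="$1" max_iterations="$2"
  shift 2  # the remaining arguments are the agent command
  local i=0

  if [ ! -f "$task_file" ]; then
    echo "task file not found: $task_file" >&2
    return 2
  fi

  # Continue while open tasks remain and the iteration cap is not reached.
  while grep -qF -- '- [ ]' "$task_file" && [ "$i" -lt "$max_iterations" ]; do
    i=$((i + 1))
    echo "iteration $i: starting a fresh agent process"
    # Each run is a new process, so nothing carries over except files on disk.
    "$@" || { echo "agent exited with an error" >&2; return 1; }
  done

  if grep -qF -- '- [ ]' "$task_file"; then
    echo "stopped after $i iterations with tasks remaining"
    return 1
  fi
  echo "all tasks done after $i iterations"
}
```

A call such as `ralph_loop TASKS.md 20 ./agent_task.sh` replaces the unbounded `while true` with a loop that ends when the task file says the work is finished, while keeping the one-process-per-iteration property.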

Ready to make your team agent-native?

We help engineering teams make the same transition UnLink did. Book a 30-minute call and we'll walk through your current setup and show you where agent workflows can have the biggest impact.

This case study documents work completed in January 2026 with UnLink's engineering team.